After several tests, it’s time to summarize the status of the backup policy and to ask the @staff members to contribute the final piece.

As requested and agreed in Slack, following the problems we had getting a site back online after the Zulip group was shut down, we have been trying to make sure it doesn’t happen again.

The strategy basically comes down to multiple backups and copies with differentiated access (while avoiding the typical complications that we network administrators tend to face and cause). It aims to protect us from technological failure, human failure, and human attack or boycott. In our case it consists of:

- AUTOMATIC daily and full backup of discuss.actualism.online. Seven in total (one for each day of the week) are maintained simultaneously.

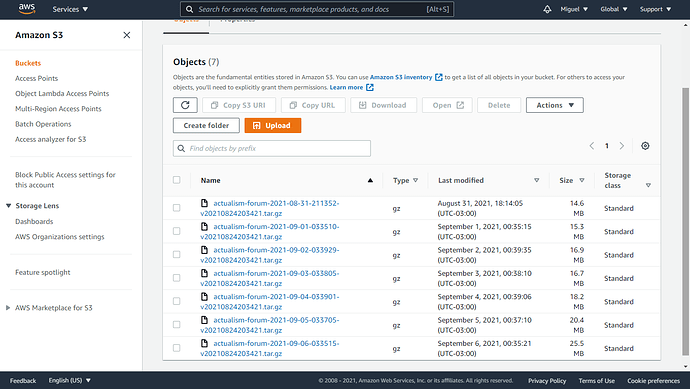

At first they were stored on this server (where Discourse is installed). Now they are sent automatically to Amazon S3 (one cloud storage service among many, but one for which Discourse is already preconfigured). Here they are:

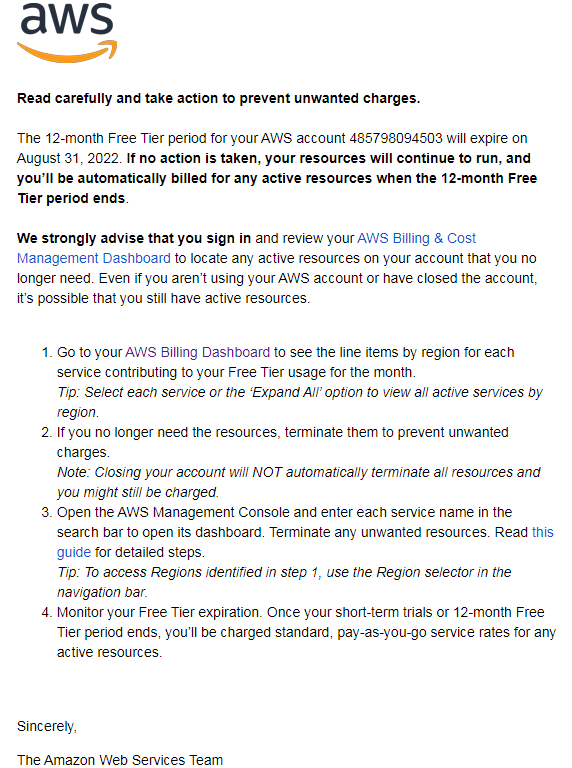
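To make the seven-backup rotation concrete, here is a minimal sketch of the retention rule: keep the newest seven backups and drop anything older. It only models the idea; it is not how Discourse implements it, and the function name and the dates are hypothetical.

```python
from datetime import date, timedelta

MAX_BACKUPS = 7  # one per day of the week, as described in the post


def prune_backups(backup_dates, max_backups=MAX_BACKUPS):
    """Return (kept, deleted): the newest `max_backups` dates and the rest."""
    ordered = sorted(backup_dates, reverse=True)  # newest first
    return ordered[:max_backups], ordered[max_backups:]


# Hypothetical example: ten consecutive daily backups ending on 2021-01-10.
last_day = date(2021, 1, 10)
backups = [last_day - timedelta(days=i) for i in range(10)]

kept, deleted = prune_backups(backups)
print(len(kept), "kept, oldest:", min(kept))
print(len(deleted), "deleted")
```

With a rule like this, the oldest copy available is always about a week old, which is the window we have to notice a problem before the last good backup rotates out.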

- AUTOMATIC sync between Amazon S3 and personal cloud spaces belonging to each of the @staff members (who will NOT have access to the locations of the other members).

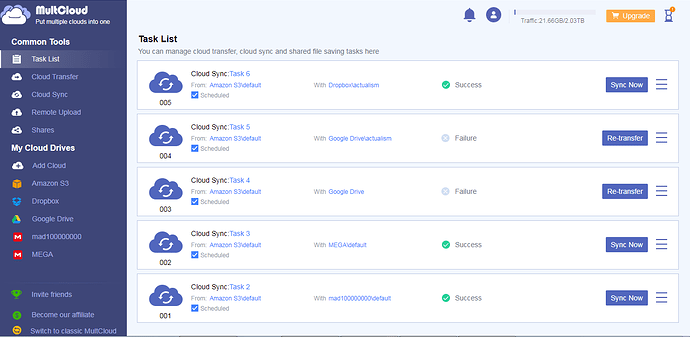

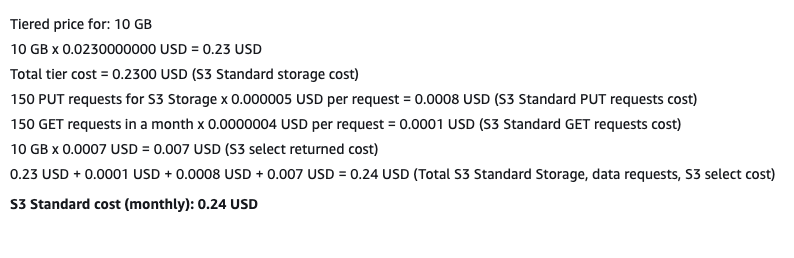

I recommend using MultCloud (https://www.multcloud.com), which offers 30GB of data traffic per month for free and performs this daily synchronization between Amazon S3 and dozens of cloud services of your choice internally, from cloud to cloud, without installing any software on your machines. For now, the 7 backups total only 128MB, so they don’t even reach 4GB of transfer per month.
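The traffic estimate can be checked with a few lines of Python. This is only a rough upper bound: it assumes a 30-day month, that the whole 128MB set is copied again every day, and that traffic is counted once per destination. None of these assumptions is confirmed by MultCloud, and the function name is made up for the example.

```python
BACKUP_SET_MB = 128   # current size of the 7 backups (from the post)
FREE_QUOTA_GB = 30    # free monthly traffic (from the post)
DAYS_PER_MONTH = 30   # assumption: a 30-day month


def monthly_transfer_gb(set_mb, destinations=1, days=DAYS_PER_MONTH):
    """Upper bound on monthly traffic if the whole set is copied every day."""
    return set_mb * destinations * days / 1024


one = monthly_transfer_gb(BACKUP_SET_MB)
five = monthly_transfer_gb(BACKUP_SET_MB, destinations=5)

print(f"1 destination:  {one:.2f} GB of {FREE_QUOTA_GB} GB")
print(f"5 destinations: {five:.2f} GB of {FREE_QUOTA_GB} GB")
```

Under these assumptions, even five destinations stay within the free quota, although the margin shrinks as the forum (and its backups) grow.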

Here you can see my account, which synchronizes the Actualist backup on Amazon S3 to two Mega accounts, one Dropbox account and two different locations of the same Google Drive account. I scheduled them 15 minutes apart (the synchronizations are fast). It is advisable to sync to more than one cloud service like this because, as you can see in the image, the synchronization with Google Drive failed (it is experiencing problems).

This scheme has an additional advantage: without any further configuration, it will also copy anything else we add to the Amazon S3 space. Examples: the backup that we will soon start making of actualism.online (which we are not bothering with yet, because for now it is only a web page on WordPress.com); a plugin that a third party may develop for us; the JSON with the Slack history; etc.

So, if @claudiu or I were suddenly floored by that mythical bus, or if our selves unexpectedly “sydharized” and we deleted the forum, any staff member could almost immediately rebuild it on another DigitalOcean droplet or any other provider. And if we also deleted the content from Amazon S3 so that no one could use those backups to recover the forum, every staff member would still have them in places that neither @claudiu nor I would have access to.
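The reasoning behind “differentiated access” can be sketched as a small model: list each copy together with the people able to delete it, then ask which copies survive if a given set of people goes rogue. The inventory and every name in it are hypothetical placeholders, not our real accounts.

```python
# Hypothetical inventory: location -> people who can delete the data there.
COPIES = {
    "DigitalOcean droplet": {"admin_a", "admin_b"},
    "Amazon S3 bucket": {"admin_a", "admin_b"},
    "staff_1 personal cloud": {"staff_1"},
    "staff_2 personal cloud": {"staff_2"},
}


def surviving_copies(copies, compromised):
    """Locations that none of the compromised people can delete."""
    compromised = set(compromised)
    return sorted(
        place for place, owners in copies.items()
        if not owners & compromised  # no overlap: this copy is out of reach
    )


# Worst case from the post: both admins delete everything they can reach.
print(surviving_copies(COPIES, {"admin_a", "admin_b"}))
```

The same check works in the other direction: if a single staff account were compromised, only that person’s copy would be at risk, and everything else would remain available.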

Additionally, as I said, we will be able to recover even from failures or errors of (or attacks against) DigitalOcean and Amazon S3.

I suppose this should be improved by having multiple owners, if possible, of the actualism.online domain (which includes the forum subdomain), or by having it belong to an organization like the one @Alanji proposed.

In a post addressed only to the staff (to avoid torturing the rest of the members even more) I’ll show how to create and configure those personal accounts in MultCloud.